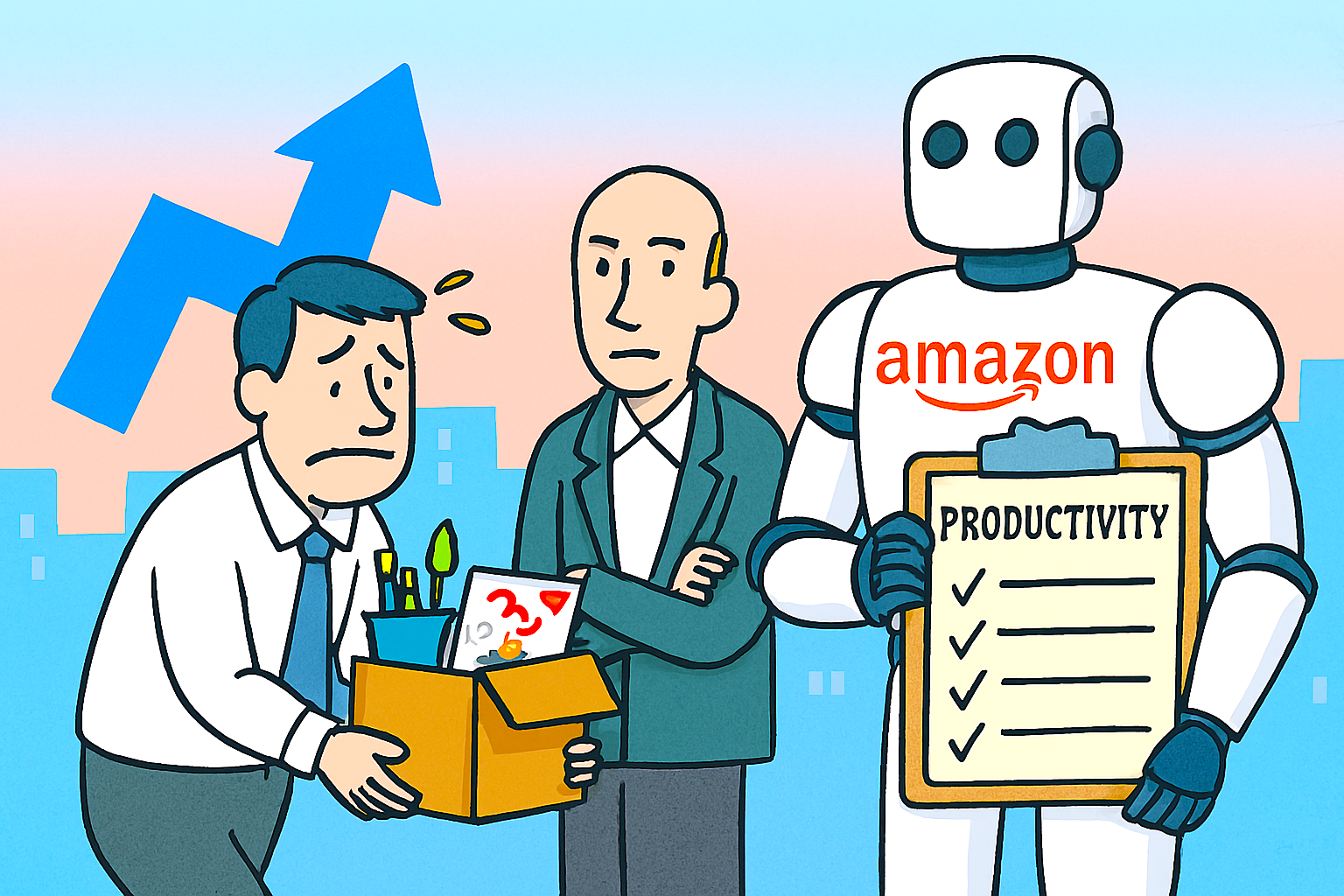

Amazon has reportedly announced its largest corporate restructuring to date, with plans to lay off up to 30,000 white-collar employees.

The cuts represent nearly 10% of its global office workforce and come as the company accelerates its transition toward artificial intelligence and automation-led operations.

The move, confirmed on 28th October 2025, marks a dramatic shift in the tech giant’s internal priorities.

CEO Andy Jassy has framed the layoffs as part of a broader effort to streamline management. The company appears intent on eliminating bureaucratic inefficiencies and reallocating resources toward AI infrastructure.

‘We will need fewer people doing some of the jobs that are being done today, and more people doing other types of jobs’, Jassy is reported as saying.

Affected departments span human resources, logistics, customer service, and Amazon Web Services (AWS). Many roles are deemed redundant due to AI integration.

Heavy investment

The company has been investing heavily in machine learning systems capable of handling tasks ranging from inventory forecasting to customer support. This investment has prompted a reevaluation of traditional staffing models.

While Amazon employs over 1.5 million people globally, the layoffs target its 350,000 corporate staff, signalling a significant recalibration of its white-collar operations.

The job cuts were reportedly delivered via email, underscoring the impersonal nature of the transition.

The timing of the announcement—just ahead of the holiday season—has raised eyebrows across the industry.

Analysts suggest Amazon is betting on AI to offset seasonal labour demands and long-term cost pressures. However, the strategy risks reputational fallout and damage to internal morale.

Structural challenges

Critics argue that the scale of the layoffs reflects deeper structural challenges, including overhiring during the pandemic and a growing reliance on technology to solve human-centred problems.

Others see it as a bellwether for the wider tech sector, where AI is increasingly viewed as both a productivity boon and a disruptive force.

As Amazon reshapes its workforce for an AI-driven future, questions remain about the social and ethical implications of such rapid automation.

For now, the company appears resolute: leaner, faster, and more algorithmically efficient—even if it means leaving tens of thousands behind in the process.

But AI is also creating job opportunities in other areas.