A quiet but consequential shift is taking place across the global technology landscape: quantum computing is no longer a distant scientific ambition but an emerging commercial reality.

A new wave of breakthroughs is accelerating timelines, and data‑centre operators — already strained by the explosive growth of AI workloads — are being forced to rethink their infrastructure from the ground up.

The latest reporting highlights how this ‘quantum moment’ is reshaping priorities across the sector.

Advances in quantum computing

For years, quantum computing has been framed as a long‑term bet, with practical applications perpetually a decade away. That narrative is now being challenged.

Advances in qubit stability, error‑correction techniques and photonic architectures (see the explainer below) are pushing the field closer to machines capable of solving commercially meaningful problems.

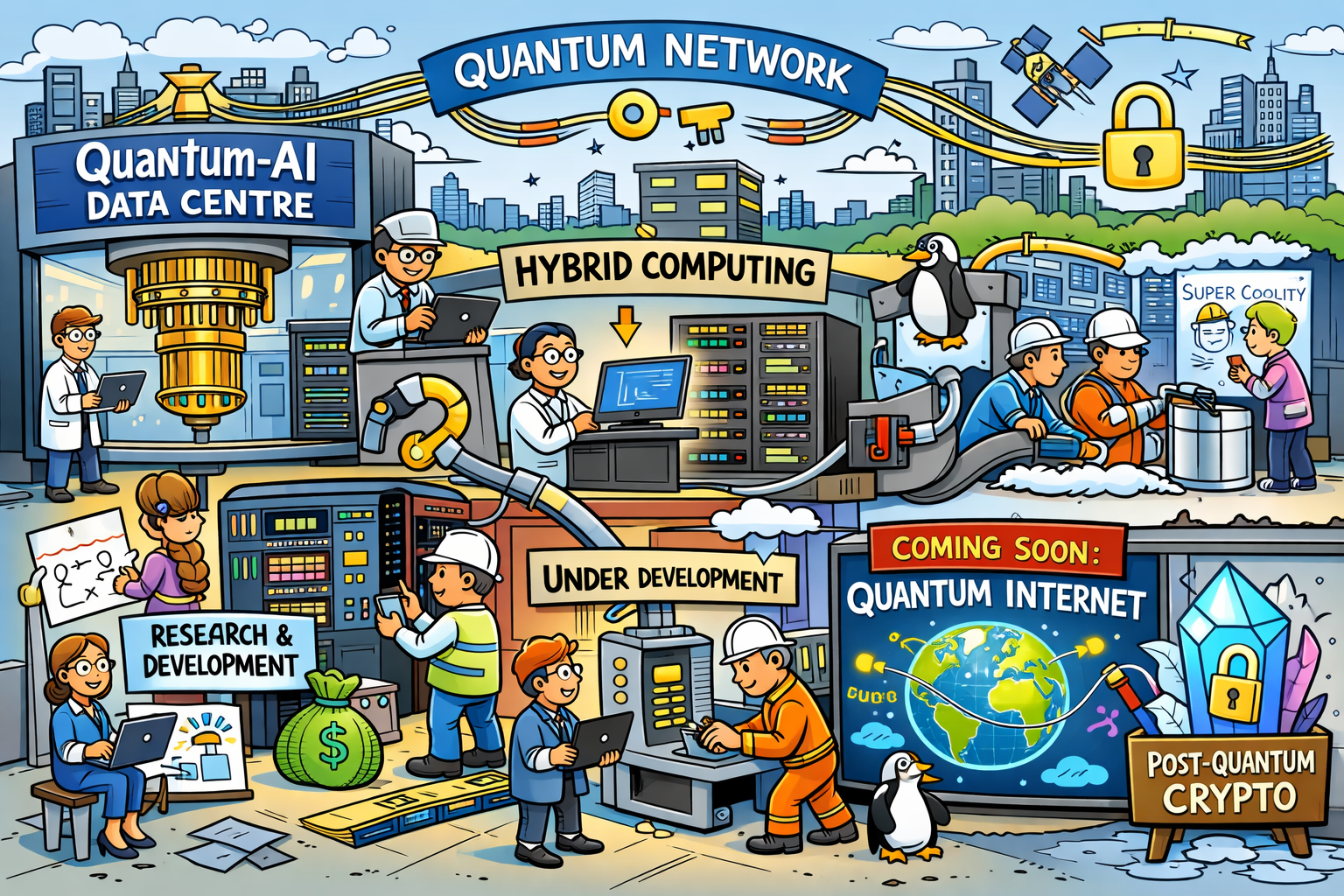

Industry leaders increasingly argue that hybrid quantum–classical systems will begin appearing inside data centres before the end of the decade, creating a new class of high‑value workloads.

This shift is happening at a time when data centres are already under unprecedented strain. The rapid adoption of generative AI has driven demand for power, cooling and specialised silicon to levels few operators anticipated.

Layered complexity

Quantum computing adds a new layer of complexity: these machines require ultra‑stable environments, extreme cooling and highly specialised networking.

As a result, data‑centre design is entering a new phase, with operators exploring everything from cryogenic‑ready layouts to quantum‑secure communication links.

The strategic implications are significant. Hyperscalers are positioning themselves early, investing in quantum‑safe encryption, photonic interconnects and experimental quantum modules that can be slotted into existing facilities.

Objective

The goal is to ensure that when quantum hardware becomes commercially viable, the supporting infrastructure is already in place.

This mirrors the early days of cloud computing, when capacity was built ahead of demand — a gamble that ultimately paid off.

Yet uncertainty remains. Some analysts caution that full‑scale commercialisation could still be decades away, pointing to slow revenue growth and persistent engineering challenges.

Even so, the direction of travel is clear: quantum computing is moving out of the lab and into the strategic planning of the world’s largest data‑centre operators.

If AI defined the last wave of infrastructure investment, quantum may define the next. And for an industry already racing to keep up, the clock has started ticking.

Explainer

What are Photonic Architectures?

Photonic architectures are quantum computing systems that use particles of light (photons), rather than electrons or superconducting circuits, as the carriers of quantum information.

These architectures are gaining traction because photons offer several unique advantages:

Key Features of Photonic Quantum Architectures

| Feature | Description |
|---|---|
| Qubits via photons | Quantum bits are encoded in properties of light, such as polarisation or phase. |
| Room-temperature operation | Unlike superconducting systems, photonic setups often don’t require cryogenic cooling. |
| Low noise and decoherence | Photons are less prone to environmental interference, improving stability. |
| Modularity and scalability | Photonic systems can be built using modular optical components, ideal for scaling. |
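
To make the idea of encoding a qubit in polarisation more concrete, here is a minimal Python sketch. It assumes an idealised, lossless model: horizontal and vertical polarisation stand in for the logical 0 and 1 states, and an ideal half-wave plate set at 22.5 degrees plays the role of a Hadamard-style gate. The function names are illustrative and are not taken from any particular quantum software library.

```python
import math

# Polarisation basis states as two-component amplitude (Jones) vectors.
# Assumption for this sketch: H is logical 0 and V is logical 1.
H = (1.0, 0.0)  # horizontal polarisation
V = (0.0, 1.0)  # vertical polarisation


def half_wave_plate(theta):
    """Jones matrix of an ideal half-wave plate with its fast axis at
    angle theta (radians), ignoring a global phase."""
    c, s = math.cos(2 * theta), math.sin(2 * theta)
    return ((c, s), (s, -c))


def apply(gate, state):
    """Multiply a 2x2 gate matrix by a 2-component state vector."""
    return tuple(sum(gate[r][k] * state[k] for k in range(2)) for r in range(2))


def measure_probabilities(state):
    """Born-rule probabilities of detecting the photon as H or V."""
    return tuple(abs(a) ** 2 for a in state)


# A half-wave plate at 22.5 degrees turns H into an equal superposition.
hadamard_like = half_wave_plate(math.pi / 8)
superposition = apply(hadamard_like, H)
probs = measure_probabilities(superposition)

# Passing the photon through the same plate again restores H.
restored = apply(hadamard_like, superposition)

print([round(p, 3) for p in probs])     # [0.5, 0.5]
print([round(a, 3) for a in restored])  # [1.0, 0.0]
```

In this toy model, a single optical component performs a single-qubit operation, which is part of why photonic designs are described as modular. Real devices also have to deal with photon loss and imperfect detectors, which the sketch leaves out.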